cross-posted from: https://hexbear.net/post/4742235

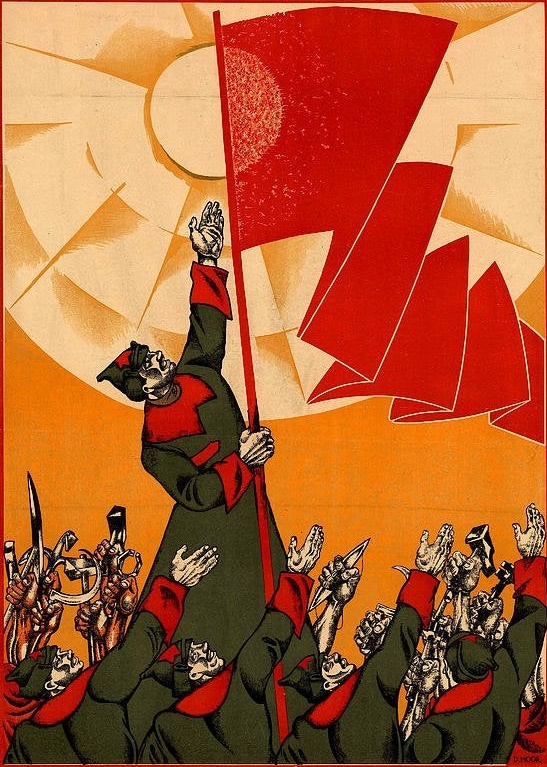

Democratization of Capitalist Values

Democratization is a word often used in connection with technological advancement and the proliferation of open-source software. Even here, on the platform where this discussion is unfolding, we are participating in a form of “democratization” of the means of “communication”. This process of “democratization” is often framed as a kind of universal or near-universal access for the masses to engage in building and protecting their own means of communication. I’ve talked at length in the past about the nature of the federated, decentralized communications movement. One of the striking aspects of this movement is how much of its shape and structure this democratization of communication shares with the undemocratic, corporate-owned means of communication. Despite being presented with the underlying protocols necessary to create a communication experience that fosters true community, the choice is made instead to take the shape and structure of centralized, corporate-owned speech and community platforms and “democratize” them, without considering the social relations engendered by those platforms.

As Marxists, we should not find this phenomenon strange, and we should be able to identify it in other instances of “democratization”. It sits at the heart of Marxist analysis: the relationship between the Mode of Production and the Superstructure of society. These “democratized” platforms mirror their centralized sisters and are imbued with the very same capitalist values, in an environment that stands in conflict with those very values. If this means of democratization of online community and communication were to be truly democratic, it would be a system that requires the least amount of technical knowledge and resources. However, the operators who sit at the top of each of these hosted systems exist higher on the class divide, because they must operate a system designed to work at scale, with a network effect at the heart of its design. This is how you end up with the contradictions that lie under each of these systems. The mastodon.social instance is the most used Mastodon instance, and its operators have a vested interest in maintaining that position, as it allows them and their organization to maintain control over the underlying structure of Mastodon. Matrix.org is the most used homeserver in its system for extremely similar reasons. Bluesky has structured itself in a way that seats it on the central throne of its own implementation. They have all obfuscated the centralization of power by covering their throne with the cloak of “democratization”. Have these systems fostered communities that would otherwise drown in the sea of capitalist online social organizing? There is no doubt. Do they require significant organizational effort and resources to maintain? Absolutely. Are they still subject to a central, technocratic authority, driven by the same motivations as their sister systems? Yes, they are.

This brings me to AI: its current implementation and design, and its underlying motivations and desires. These systems suffer from the same issues that this very platform suffers from, namely, that they are stained with the values of capital at their heart, and they are by no means a technology that is “neutral” in its design or its implementation. It is foolish to say that “Marxists have never opposed technological progress in principle”, because this statement handwaves away the critical view of technology in the Marxist tradition. Marx spends more than 150 pages (a tome in its own right) on the subject of technology and technological advancement under Capitalism in Volume 1 of Capital. There he outlines how the worker becomes subjugated to the machine, and I find that this quote from Marx drives home my position, and I think the position of others, regarding the use of AI in its current form (emphasis mine):

The lightening of the labour, even, becomes a sort of torture, since the machine does not free the labourer from work, but deprives the work of all interest. Every kind of capitalist production, in so far as it is not only a labour-process, but also a process of creating surplus-value, has this in common, that it is not the workman that employs the instruments of labour, but the instruments of labour that employ the workman.

— Capital, Volume 1, Production of Relative Surplus-Value, Machinery and Modern Industry, Section 4: The Factory

What is it, at the core of both textual and graphical AI generation, that is being democratized? What has the capitalist sought to automate in its pursuit of Large Language Model research and development? It is the democratization of skill. It is the alienation of the Artist from the labor of producing art. As such, it does not matter that this technology has become “democratized” via open-source channels, because at the heart of the technology, its intention and design, its implementation and commodification, lies the alienation of the artist from the process of creating art. It is not the “democratization” of “creativity”. There are scores of artists throughout our history whose art is regarded as creative despite its simplicity in both execution and level of required skill.

One such artist who comes to mind is Jackson Pollock, an artist synonymous with paint splattering and a major contributor to the abstract expressionist movement. His aesthetic has been described as a “joke” and void of “political, aesthetic, and moral” value, and used as a means of denigrating the practice of producing art. Yet, as you put it in your own words, “Creativity is not an inherent quality of tools — it is the product of human intention”. One of the obvious things that these generative models exhibit is a clear and distinct lack of intention. I believe that this lack of “human intention” is explicitly what drives people’s repulsion from the end product of generative art. It also becomes “a sort of torture” under which the artist becomes employed by the machine. There are endless accounts of artists whose roles as creators have been reduced to that of Generative Blemish Control Agents, cleaning up the odd, strange, and unintended aspects of the AI process.

Capitalist Mimicry and The Man In The Mirror

One thing often cited as a mark in favor of AI is the emergence of Deepseek onto the market as a direct competitor to leading US-based AI models. Its debut was massive and disruptive, slicing nearly $2 trillion in market cap off the US tech sector in a matter of days. This explosive out-of-the-gate performance was not the result of any new, ideologically driven reorientation in the nature and goals of generative AI modeling, but of refining the training process to meet the restrictive conditions created by embargoes on Western AI processing technology to China.

Deepseek has been hailed as what can be achieved under the “Socialist Model” of production, but I’m inclined to argue that this isn’t as true as we wish to believe. China is a vibrant and powerful market economy, one that is governed and controlled by a technocratic party who have a profound understanding of market forces. However, their market economy is not any more or less susceptible to the whims of capital than any other market. A recent prime example was the speculative nature of their housing market, which the state is resolving through a slow deflation of the sector and seizure of assets, among other measures. I think it is safe to argue that much of the demand in the Chinese market economy is forged by the demands of external Capitalist desires. As the world’s forge, the heart of production in the global economy, their market must meet the demands of external capitalist forces. It should be remembered here that the market economy of China operates within a cage, with no political influence on the state, but that does not make it immune to the demands and desires of Capitalists at the helm of states abroad.

Yes, Deepseek is a tool set released in an open-source way. Yes, Deepseek is a tool set that one can use at a much cheaper rate than competitors in the market, or host on one’s own infrastructure. However, what is the tool set exactly, what are its goals, who does it benefit, and who does it work against? The incredible innovation under the “Socialist model” still performs the same processes of alienation that capitalists in the West are searching for, just at a far cheaper cost. This demand is one of geopolitical economy: using free trade principles, Deepseek intends to drive demand away from US-based solutions and into its coffers in China. The competition created by Deepseek has ignited several protectionist practices by the US to save the most important driver of growth in its economy, the tech sector. The newfound efficiency of Deepseek threatens not just the AI sector inside of tech, but the growing connective tissue that has sprung up around the sector. The bloated and wasteful implementation of OpenAI’s models gave rise to growing demand for power generation, data centers, and cooling solutions, all of which lost heavily when Deepseek arrived. So at its heart, it has not changed what AI does for people, only how expensive AI is for capitalists in year-to-year operations. What good is this open-source tool if what is being open sourced are the same demands and desires of the capitalist class?

Reflected in the production of Deepseek is the American Capitalist. He stands as the man in the mirror, and the market economy of China is doing what a market economy does: competing for territory in hopes of driving out competition and becoming a monopoly agent within the space. This monopolization process can still be carried out through open-source distribution. Just as in my example above, of the social media platforms democratizing the social relations of capitalist communal spaces, so too is Deepseek democratizing the alienation of artists and writers from their labor.

They are not democratizing the process of Artists and Laborers training their own models to perform specific and desired repetitive tasks as part of their own labor process in any form. They hold all the keys because even though they were able to slice the head from the generative snake that is the US AI Market, it still cost them several million dollars to do so, and their clear goal is to replace that snake.

A Renaissance Man Made of Metal

Much in the same way that the peasants of the past lost access to the commons and were forced into the factories under this new, capitalist organization of the economy, the artist has been undergoing a similar process. However, instead of toiling away on their plots of land in common, giving up a tenth of their yield each year to their lord, and providing a sum of their hourly labor to work the fields at the manor, the Artist historically worked at the behest of a Patron. The high watermark for this organization of labor was the Renaissance period. In this period, names we all know and recognize, such as da Vinci, Michelangelo, Raphael, and Botticelli, were paid by their Patron Lords, or at times the popes of Rome, to hone their craft and in exchange paint great works for their benefactors.

As time passed and the world industrialized, the system of Patronage faded and gave way to the Art Market, where artists could sell their creative output directly to galleries and individuals. With the rise of visual entertainment and our modern entertainment industry, most artists’ primary income stems from the wage labor they provide to the corporation by which they are employed. They require significant training: years and decades of practice and development. The reproduction of their labor has always been a hard nut to crack, until very recently. Some advancements in mediums shifted the demand for different disciplines. 2D animators found themselves washed up on the shores of the 3D landscape, wages and benefits depleted, back on the bottom rung learning a new craft after decades of momentum via unionization in the 2D space. The transition from 2D to 3D in animation is a good case study in the process of proletarianization, very akin to the drive to teach students to code decades later in a push for the STEM sector. Now, both of these sectors of laborers are under threat from the Metal Renaissance Man, who operates under the patronage of his corporate rulers, producing works at their whim, and at the whim of others, for a profit. This Mechanical Michelangelo has the potential to become the primary source of artistic and, in the case of code, logical expression, and the artists and coders who trained him become his subordinates, cleaning up the mistakes and hiding the rogue sixth finger and toe as needed.

Long gone are the days of Patronage, and soon the days of laboring for a wage to produce art will be gone too. We have to, as revolutionary Marxists, recognize that this contradiction presents to artists, as laborers, the end of their practice, not the beginning or enhancement of that practice. It is this mimicry, in which the current technological solutions participate, that strikes at the heart of the artists’ issue. Artists are hired for their talent, then used to train the machine by which they will be replaced or reduced, thus limiting the economic viability of the craft for a large portion of the artistic population. The only other avenue for sustainability is the Art Market, which has long been a trade backed by the laundering of dark money and the sound of a roulette wheel: a place where “meritocracy” rules with an iron fist. It is not enough for us to look at the mechanical productive force that generative AI represents and brush it aside as simply the wheels of progress turning. To do so is to alienate a large section of the working class, a class whose industry constitutes the same percentage of GDP as sectors like Agriculture.

I have no issue with the underlying algorithm, the attention-based training, that sits at the center of this technology. It has done some incredible things for science, where a focused and specialized use of the technology is applied. Under an organization of the economy void of capitalist desires and the aim to alienate workers from their labor, these algorithms could be utilized in many ways. Undoubtedly, organizations like the USSR’s Artists’ Unions would be central in the planning and development of generative AI technology under Socialism. However, every attempt to restrict and manage the use of generative AI today is simply an effort to prolong the full proletarianization process of the arts. Embracing it now only signals your alliance with that process.

If this means of democratization of online community and communication were to be truly democratic, it would be a system that requires the least amount of technical knowledge and resources.

That doesn’t really follow. Marxists acknowledge the necessity of division of labor and hierarchies. People with specific skills end up serving specific types of roles within the community. The notion of flat structures and direct democracy is an anarchist concept. Technically knowledgeable workers are still part of the working class, which is people who sell labour for a living. Their interests stand in direct opposition to the interests of the capital-owning class, just as do those of all other workers.

This brings me to AI: its current implementation and design, and its underlying motivations and desires. These systems suffer from the same issues that this very platform suffers from, namely, that they are stained with the values of capital at their heart, and they are by no means a technology that is “neutral” in its design or its implementation.

This is a false premise because this technology exists outside capitalism. Community-developed models, or models developed in countries like China where different political and material conditions exist, clearly fall outside the capitalist framework. Given that this technology exists, and it’s being actively developed outside the West, I don’t really think this premise holds water.

It is the democratization of skill. It is the alienation of the Artist from the labor of producing art. As such, it does not matter that this technology has become “democratized” via open-source channels, because at the heart of the technology, its intention and design, its implementation and commodification, lies the alienation of the artist from the process of creating art.

While Marx recognized how technology would be used to alienate workers within the capitalist framework, Marx never advocated against technological progress or automation. In fact, his whole argument is that the alienation of workers is precisely what sets the stage for socialism, as the capitalist system becomes increasingly unpalatable to the working majority.

China is a vibrant and powerful market economy, one that is governed and controlled by a technocratic party who have a profound understanding of market forces.

You’re coming dangerously close to making a fallacy of equating markets with capitalism here. Markets are not at odds with socialism in any way, nor is China even the first market driven socialist economy. I urge you to read up on Hungary and Yugoslavia as prior examples.

It should be remembered here that the market economy of China operates within a cage, with no political influence on the state, but that does not make it immune to the demands and desires of Capitalists at the helm of states abroad.

This is a hand wavy statement that does not directly support your assertion regarding DeepSeek. You would have to explicitly establish the mechanism that you believe affects the way DeepSeek is subverted by external capitalist forces.

So at its heart, it has not changed what AI does for people, only how expensive AI is for capitalists in year-to-year operations. What good is this open-source tool if what is being open sourced are the same demands and desires of the capitalist class?

You’re once again using a false premise here. The obvious answer is that lower power cost makes this tool available to regular people. It wrests control of the tools out of the hands of capitalists and makes it possible for everyone to use them in any way they see fit. Another way to put it, is that it puts the means of production in the hands of the workers.

They are not democratizing the process of Artists and Laborers training their own models to perform specific and desired repetitive tasks as part of their own labor process in any form.

That’s literally what’s happening. AI tools like ComfyUI are already being used by artists to collaborate and bring their visions to life, particularly for smaller studios. These tools streamline the workflow, allowing for a faster transition from the initial sketch to a polished final product. They also facilitate an iterative and dynamic creative process, encouraging experimentation and leading to unexpected, innovative results. Far from replacing artists, AI expands their creative potential, enabling smaller teams to tackle more ambitious projects.

However, every attempt to restrict and manage the use of generative AI today is simply an effort to prolong the full proletarianization process of the arts. Embracing it now only signals your alliance with that process.

It’s simply delaying the inevitable without actually doing anything to improve the situation in the long run. The AI boycott movement is peak liberal idealism. It’s the political equivalent of shaking a fist at the sky. Unhappy with the tech? Can’t imagine real action? Just vote it away! As if moral posturing ever stopped a single corporation.

Real action involves building instead of pleading. Open development where artists, engineers, and communities co-create tools is the only path to wrest control from Silicon Valley’s black boxes. You don’t vote out exploitation; you have to put in work to replace it. My whole argument in the original post is that the way forward is to build tools outside the capitalist framework, instead of ceding control to corporations, which is what rejecting the use of this tech will ultimately accomplish.

Thanks for your patience and diligence. These vibes-based Luddite posts/comments were initially surprising to see on an ML thread, but now it just feels pathetic. There are only so many ways one can explain this stuff; it’s clear that artisans are having reactionary takes as they fear proletarianization.

(I really don’t understand why this is so hard to understand; it’s such a basic aspect of Marxism and Capital that the more I see this stuff on Lemmygrad, the more I feel like I am outgrowing Lemmygrad from an ML perspective. Honestly, your posts are like 90% of why I am still hanging around.)

Thanks, I think helping with education on these fundamental issues is essential. If even the ML community here cannot establish a coherent and principled analysis of such topics, it spells trouble for Marxists in the West as a whole. In my view, Lemmygrad has significant potential as an educational platform, given that most participants here already possess at least a foundational grasp of Marxist theory. Those of us with deeper expertise should take responsibility for guiding others to advance their understanding in turn. It’s the only way we can grow.

I’ve been following both posts and looking at this stuff from a lot of people, including some IRL communist friends. I always have these two gripes (or three, depending on how they’re counted):

- Art is already (profoundly) commodified: look at the whole Hollywood complex. And, to be super specific, look at animation. Human hand-drawn art is already commodified. Look at Magic: the Gathering art. Look at any piece of drawn advertising. I’m not even bringing photography, photo editing, filmmaking, 3D modeling, acting and such into the picture. I’ve read in another comment that the OP’s position is more of an “it’s about the sincerity of the art” one, but we know that corporate art is (to some degree) soulless regardless of human intent. So, automating it would be a good way to take away this burnout-inducing suppression of the artist’s will. (This last part is written naively, just to bridge to the second point.)

- Most arguments liberal artists use are more labor-aristocratic than anything: from what I usually see, at least in my reality and part of the world, people who graduate in or study the arts are usually either people with so much money that they can afford never to work to sustain themselves, or proletarians who are looking for some economic ascension but are lost in a romantic idea of the artistic profession. And, again in my own experience, those are the people who like to entrench themselves in such arguments. So I apply the same reasoning as with the textile industry during the Industrial Revolution.

The final gripe is just pedantry: please, let’s call it image generation. We use AI all the time, and not only in the form of LLMs: disease diagnosis, image recognition, text recognition, audio noise suppression, traffic control and so much more. People usually throw the baby out with the bathwater, but it just shows their complete ignorance on the matter.

Those are excellent points. I completely agree that the liberal worldview dominates Western thought, even within leftist circles, and this inevitably leads to reactionary analyses that offer no real solutions. You’re also right that we should clarify what kind of AI systems we’re discussing, since many aren’t even LLMs. Symbolic logic and other AI methods exist, but LLMs are just the latest hype cycle soaking up all the attention.


You are right but as a westerner I am being increasingly disillusioned by other westerners; our class on the world stage slows down our progression. My frustration is probably projection on my part as a labor aristocrat with conflicting priorities. I suspect despite a lack of coherent theory a lot of comrades here on these forums are probably doing significantly more than I am IRL. (Heck I wanted to do a deep dive article into nations/genders as effective classes but it has been ages and I still have not found the time to do it.)

I have been low-key learning Mandarin for the past two years because I can’t see anything good happening in the West going forward, so I can definitely relate to the disillusionment. It is frustrating to live in a society where the majority of people have a different world model from you, and problems that are blindingly obvious to you are treated as if nobody understands why they’re happening and what the solutions are.

Marx never advocated against technological progress or automation

Fun fact: Bernstein did so, bastardising Marx.

lol reactionary thought lives on :)


(1) Marxists are pro-centralization, not decentralization. We’re not anarchists/libertarians. This is good for us as it lays the foundations for socialist society, while also increasing the contradictions within capitalist society, bringing the socialist revolution closer to fruition.

This centralist tendency of capitalistic development is one of the main bases of the future socialist system, because through the highest concentration of production and exchange, the ground is prepared for a socialized economy conducted on a world-wide scale according to a uniform plan. On the other hand, only through consolidating and centralizing both the state power and the working class as a militant force does it eventually become possible for the proletariat to grasp the state power in order to introduce the dictatorship of the proletariat, a socialist revolution.

— Rosa Luxemburg, On the National Question

Communist society is stateless. But if true - and most certainly it is - what really is the difference between anarchists and Marxist communists? Does this difference no longer exist, at least on the question of the future society and the “ultimate goal”? Of course it exists, but is altogether different. It can be briefly defined as the difference between large centralized production and small decentralized production. We communists on the other hand believe that the future society…is large-scale centralized, organized and planned production, tending towards the organization of the entire world economy…Future society will not be born of “nothing”, will not be delivered from the sky by a stork. It grows within the old world and the relationships created by the giant machinery of financial capital. It is clear that the future development of productive forces (any future society is only viable and possible if it develops the productive forces of the already outdated society) can only be achieved by continuing the tendency towards the centralization of the production process, and the improved organization of the “direction of things” replacing the former “direction of men”.

— Nikolai Bukharin, Anarchy and Scientific Communism

The advance of industry, whose involuntary promoter is the bourgeoisie, replaces the isolation of the labourers, due to competition, by the revolutionary combination, due to association. The development of Modern Industry, therefore, cuts from under its feet the very foundation on which the bourgeoisie produces and appropriates products. What the bourgeoisie therefore produces, above all, are its own grave-diggers.

— Marx & Engels, Manifesto of the Communist Party

(2) Much of your discussion just regards how AI is turning artists into an “extension of the machine” and further alienating their labor. But, like, that’s already true for most workers. Petty bourgeois artists will have to fall to the low, low place of the common working man… gasp! The reality is that it is good for us, because a lot of these petty bourgeois artists, precisely because they are “self-made” and not as alienated from their labor as regular workers, tend to have more positive views of property right laws. If more of them become “extensions of the machine” like every other prole, then their interests will become more materially aligned with the proles. They would stop seeing art as a superior kind of labor that makes them better and more important than other workers, and would instead see themselves as equal with the working class and having interests aligned with them.

(3) Your discussion regarding Deepseek is confusing. Yes, the point of AI is to improve productivity, but this is an objectively positive thing and the driving force of history that all Marxists should support. The whole point of revolution is that the previous system becomes a fetter on improving productivity. Whether or not Deepseek was created to improve productivity for capitalist or socialist reasons, either way, improving productivity is a positive thing. It is good to reduce labor costs.

[I]t is only possible to achieve real liberation in the real world and by employing real means, that slavery cannot be abolished without the steam-engine and the mule and spinning-jenny, serfdom cannot be abolished without improved agriculture, and that, in general, people cannot be liberated as long as they are unable to obtain food and drink, housing and clothing in adequate quality and quantity. “Liberation” is an historical and not a mental act, and it is brought about by historical conditions, the development of industry, commerce, agriculture, the conditions of intercourse.

— Marx & Engels, The German Ideology

The proletariat will use its political supremacy to wrest, by degrees, all capital from the bourgeoisie, to centralise all instruments of production in the hands of the State, i.e., of the proletariat organised as the ruling class; and to increase the total of productive forces as rapidly as possible.

— Marx & Engels, Manifesto of the Communist Party

(4) Clearly, for the proletariat, “full proletarianization of the arts” is by definition a good thing for the proletarian movement.

(1) Marxists are pro-centralization, not decentralization. We’re not anarchists/libertarians. This is good for us as it lays the foundations for socialist society, while also increasing the contradictions within capitalist society, bringing the socialist revolution closer to fruition.

OP is not advocating for decentralization, this is a misrepresentation of their point. My understanding is that he was talking about how decentralized social media also ends up centralized, and provided examples of that. I don’t think this point has much to do with AI, tho.

(2) Much of your discussion just regards how AI is turning artists into an “extension of the machine” and further alienating their labor. But, like, that’s already true for most workers. Petty bourgeois artists will have to fall to the low, low place of the common working man… gasp! The reality is that it is good for us, because a lot of these petty bourgeois artists, precisely because they are “self-made” and not as alienated from their labor as regular workers, tend to have more positive views of property right laws. If more of them become “extensions of the machine” like every other prole, then their interests will become more materially aligned with the proles. They would stop seeing art as a superior kind of labor that makes them better and more important than other workers, and would instead see themselves as equal with the working class and having interests aligned with them.

This is a completely US/Euro-centric view of what artists are and it’s fucked up to say. We should not be celebrating more workers getting the short end of the stick; we should be showing them solidarity and showing them the way to organization. Antagonizing them just because you think they are petty-bourgeois is completely counterproductive. Most artists are either just making ends meet or working for big companies like every other worker; only a rather small minority of artists would fit the petty-bourgeois label.

(4) Clearly, for the proletariat, the “full proletarianization of the arts” is by definition a good thing for the proletarian movement.

This doesn’t mean they will suddenly develop class consciousness. They were never a part of the bourgeoisie to begin with, and therefore our interests were already aligned.

This is a completely US/Euro-centric view of what artists are and it’s fucked up to say. We should not be celebrating more workers getting the short end of the stick, we should be showing them solidarity and showing them the way to organization.

Are artists who work for themselves something that only occurs in the US and Europe? I guess I just live under a rock, genuinely did not know.

Antagonizing them just because you think they are petty-bourgeois is completely counterproductive. Most artists are either just making ends meet

I don’t know what “antagonizing” has to do with anything here, and if you work for yourself you are by definition petty-bourgeois. How successful you are at that isn’t relevant. The point is not about moralizing, I get the impression when you talk about “antagonizing” you are moralizing these terms and acting like “petty-bourgeoisie” is an insult. It’s not. Many members of the petty-bourgeoisie are genuinely good people just trying to make their way in the world. It’s not a moral category.

I am talking about their material interests. A person who works for themselves isn’t as alienated from their labor as someone who works for a big company, and this leads them to also value property rights more because they have more control over what they produce and what is done with what they produce.

or working for big companies like every other worker

If you really are working for a big company where, like all regular workers, you don’t get much say in what you produce or any control over it in the first place, then yes, your position is already more in line with that of a member of the proletariat, but a person like that would also be easier to appeal to. They wouldn’t have as much material interest in protecting intellectual property right laws because they are already alienated from what they produce.

In my personal experience (I have no data on this so take it with a grain of salt), petty-bourgeois artists tend to be more difficult to appeal to because even in the cases where they have left-leaning tendencies, they tend to lean more towards things like anarchism where they believe they can still operate as a petty-bourgeois small producer. I remember one anarchist artist who even told me that they would still want community enforcement of copyright under an anarchist society because they were afraid of people copying their art.

Maybe you are right and I am just sheltered and most artists outside of US and Europe work for big companies and the kind of “self-made” artist is more of a western-centric thing. But if that’s the case, you can consider the commentary to be more focused on the west, because it still is worth discussing even if it’s not universally applicable.

This doesn’t mean they will suddenly develop class consciousness.

Of course; on their own, people develop at best trade-union consciousness. You are already seeing increased unionization and union activity from artists in response to AI. For class consciousness, people need to be educated.

They were never a part of the bourgeoisie to begin with, and therefore our interests were already aligned.

Many, at least here in the west where I live, are petty-bourgeois. Not all, but the “self-made” ones tend to be the most vocal against things like AI and they care the most about protecting things like copyright and IP law. If you’re working for a big company, the stuff you draw belongs to the company, and even if it didn’t, it would still have no utility to yourself because it’s designed specifically to be used in company materials, so not only do these property right laws not allow you to keep what you draw, but even if they were removed, you wouldn’t want to keep it, either, because it has no use to you.

That is why the proletariat is more alienated from their labor, and why they have less material interest in maintaining these kinds of property right laws. Of course, that doesn’t mean a member of the petty-bourgeois class can’t be appealed to, but it is a bit harder. In the Manifesto, Marx and Engels argue that they can be appealed to when they view their ruination and transformation into members of the proletariat as far more likely than ever succeeding and advancing into the bourgeois class.

But Marx and Engels also argue that they are typically reactionary because they want to hold back the natural development of the productive forces, such as automation, precisely because it will lead to the ruination of most of them. This is the major problem with a lot of petty-bourgeois artists: they want to hold back automation in the form of AI because they are afraid it will hurl them into the proletariat. However, as automation continues to progress, it will eventually have gone so far that it’s clear there is no going back, and they will have to come to grips with this fact; that’s when the proletariat can start appealing to them.

Engels made the same recommendation regarding the peasantry. The ruination of the peasantry, like that of the petty-bourgeoisie, is inevitable with the development of the forces of production, specifically with the development of new productive forces that automate or semi-automate many aspects of agriculture. So the proletariat should never promise the peasantry to preserve their way of life forever; it should only promise them better conditions during their transformation into members of the proletariat. Engels specifically argued that collectivizing the peasant farms would allow them to develop into farming enterprises in a way that saves the peasants from losing their farms, which the majority would otherwise lose in the normal course of development.

Similarly, we should not promise any petty-bourgeois worker that we are going to hold back or even ban the development of the forces of production to preserve their way of life, but only that a socialist revolution would provide them better conditions during this transformation process. Yes, as you said, many of these artists are “just making ends meet,” and that’s the normal state of affairs. The petty-bourgeoisie are called petty for a reason: they are not your rich billionaires, and most of them are struggling.

As for petty-bourgeois artists, if we simply banned AI, their life would still be shit, because we would just be stopping the development of the productive forces to preserve their already shitty way of life. In a socialist state, however, they would be provided for much more adequately, and so even though they would have to work in a public enterprise and could no longer be a member of the petty-bourgeoisie, they would actually have a much higher and more stable quality of living than “just making ends meet.” They would have financial security and stability, and more access to education and free time to pursue artistry that isn’t tied to making a living.

Marxists should not be in the business of trying to stall the progress of history to save non-proletarian classes, and the artists who work for big corporations who don’t own their art are already proletarianized, so the development of AI doesn’t change much for them.

Are artists who work for themselves something that only occurs in the US and Europe? I guess I just live under a rock, genuinely did not know.

Of course not, and I never said it didn’t exist; rather, it’s not the majority, as you implied, at least not from what I have observed.

I don’t know what “antagonizing” has to do with anything here, and if you work for yourself you are by definition petty-bourgeois. How successful you are at that isn’t relevant. The point is not about moralizing, I get the impression when you talk about “antagonizing” you are moralizing these terms and acting like “petty-bourgeoisie” is an insult. It’s not. Many members of the petty-bourgeoisie are genuinely good people just trying to make their way in the world. It’s not a moral category.

I didn’t mean antagonizing in a moralistic way. Yes, your definition is correct on paper, but I responded the way I did because of the contempt with which you initially talked about artists. I get what you were trying to say now, but saying it that way is not gonna win artists’ hearts, even if they do, as a whole, become extremely proletarianized.

In my personal experience (I have no data on this so take it with a grain of salt), petty-bourgeois artists tend to be more difficult to appeal to because even in the cases where they have left-leaning tendencies, they tend to lean more towards things like anarchism where they believe they can still operate as a petty-bourgeois small producer.

I get what you’re saying; I have seen this type of behavior before, mainly from English-speaking artists, but that is not my experience with artists from outside the imperial core. Here in Brasil at least, I tend to see a lot of left-leaning artists who understand their place in class society and class struggle. Maybe it’s something more specific to my country, I don’t know.

For the rest of your comment, I completely agree. Also, just to be clear, I’m not on the side of banning AI or promising artists that things are gonna stay the way they are, but rather on the side of advocating for their organization and their education on class dynamics and struggle. In fact, there are already examples of artists organizing here in Brasil, like the movement UNIDAD.

Marxists are pro-centralization, not decentralization. We’re not anarchists/libertarians.

I think we should strive to develop new Marxist models of decentralized systems, because a lot of this centralized theory predates the widespread use of electricity, let alone modern technology and the vast advances that have been made in science.

This is how Marxism survives and thrives: not by worshipping books and models as dogma.

I think we should strive to develop new Marxist models of decentralized systems

I don’t think Marxists, or materialists in general, should be concerned about “centralization” vs. “decentralization” at all. The classes of problems that technology solves, and the solution methods available, are numerous, and it is very reductive to lump them into “centralized” or “decentralized” categories. It is an unscientific approach, no less bizarre than arguing about whether Lebesgue integrals or Riemann sums are more Marxist.

The centralization of production is the material foundation for socialist society and the core of Marx’s historical materialist argument as to why capitalism is not an eternally sustainable system. If you abandon it, then Marxism might as well be thrown in the trash, because it would no longer have a materialist argument against capitalism. You could only mount a moralist argument at that point. Unless you are arguing that there is a different historical materialist argument possible that you could make that doesn’t rely on appealing to the laws of the centralization?

Unless you are arguing that there is a different historical materialist argument possible that you could make that doesn’t rely on appealing to the laws of the centralization?

Kind of a late reply, but yeah. There are actually many phenomena ongoing simultaneously these days that place a hard limit on the perpetuation of capitalist society, which makes it kind of strange to me that some Marxists are arguing about centralization (as if monopoly capitalism hasn’t been around for 100 years now).

- Capitalist countries are basically unable to manage the climate or ecological crisis

- As capitalist countries develop, their birth rates fall

- Falling birthrates and falling rates of productivity growth place downwards pressure on profits, which the capitalists are failing to restore

- The shots in the arm that the capitalist-imperialist countries got from all their imperial plunder are fading in effect over time

- De-industrialization has weakened the capitalist-imperialist countries’ ability to maintain control over the globe

- The global surplus fund of labor is, in general, declining (relatively).

The development process of capitalism does not so much produce “centralisation” (which is ill defined tbh) as socialisation (the conversion of individual labor to group labor), urbanisation and standardisation.

And while someone might quibble over the idea that “centralisation = socialisation”, people in general have all sorts of ideas about what centralisation is, while “socialisation” itself retains a solid definition.

Furthermore, while it is true that socialist society develops out of capitalist society, revolutions are by definition a breaking point in the mode of production which makes the insistence that socialist societies must be highly centralised backwards logic. We are starting from a dislike of anarchism’s dogma of decentralisation and just working backwards.

Not that I necessarily oppose having a highly “centralised” socialist society, but that would be a very reductive way of classifying a mode of production, given what we know about networks and production cycles in modern theories.

The development process of capitalism does not so much produce “centralisation” (which is ill defined tbh) as socialisation (the conversion of individual labor to group labor), urbanisation and standardisation.

This is just being a pedant. Just about every Marxist author uses the two interchangeably. We are talking about the whole economy coming under a single common enterprise that operates according to a common plan, and the process of centralization/socialization/consolidation/etc is the gradual transition from scattered and isolated enterprises to larger and larger consolidated enterprises, from small producers to big oligopolies to eventually monopolies.

Furthermore, while it is true that socialist society develops out of capitalist society, revolutions are by definition a breaking point in the mode of production which makes the insistence that socialist societies must be highly centralised backwards logic.

Marxism is not about completely destroying the old society and building a new one from the void left behind. Humans do not have the “free will” to build any kind of society they want. Marxists view the on-the-ground organization of production as determined by the forces of production themselves, not through politics or economic policies. When the feudal system was overthrown in the French Revolution, it was not as if the French people just decided to transition from total feudalism to total capitalism. Feudalism at that point basically didn’t even exist anymore; the industrial revolution had so drastically changed the conditions on the ground that society was basically already capitalist and entirely disconnected from the feudal superstructure.

Marx compared it to how when the firearm was invented, battle tactics had to change, because you could not use the same organizational structure with the invention of new tools. Engels once compared it to Darwinian evolution but for the social sciences, not because of the natural selection part, but the gradual change part. The political system is always implemented to reflect an already-existing way of producing things that arose on the ground of its own accord, but as the forces of production develop, the conditions on the ground very gradually change in subtle ways, and after hundreds of years, they will eventually become incredibly disconnected from the political superstructure, leading to instability.

Marx’s argument for socialism is not a moralistic one; it is precisely that centralized production is incompatible with individual ownership, and that the development of the forces of production, very slowly but surely, replaces individual production with centralized production, destroying the foundations of capitalism in the process and developing towards a society that is entirely incompatible with the capitalist superstructure, leading to social and economic instability, with the only way out being to replace individual appropriation with socialized appropriation through the expropriation of those enterprises.

The foundations remain the same; the superstructure on top of those foundations changes. The idea that the forces of production lead directly to centralization but that post-capitalist society doesn’t have to be centralized is straight-up anti-Marxist idealism. You are just not a Marxist, and that’s fine, if you are an anarchist just be an anarchist and say you are one and don’t try to misrepresent Marxian theory.

We are starting from a dislike of anarchism’s dogma of decentralisation and just working backwards.

Oh wow, all of Marxism is apparently just anarchist hate! Who knew! Marxism debunked! No, it’s because anarchists are just like you: they don’t believe that the development of the past society lays the foundations for the future society; they are not historical materialists, but believe humanity has the free will to build whatever society it wants, and so they want to destroy the old society completely rather than sublate it, and build a new society out of the ashes left behind. They dream of taking all the large centralized enterprises and “busting them up” so to speak.

We are talking about the whole economy coming under a single common enterprise that operates according to a common plan

The details of what this common plan looks like and how different sectors act cannot be proclaimed in advance. It is not pedantic to distinguish between socialisation and centralisation.

An enterprise can be highly socialised (a common budget and plan for all departments) while different departments are given significant amounts of autonomy (thereby being relatively decentralised). How this decision making is carried out, at what level, how many levels there even are in the hierarchy are important questions. And the answer to all of these questions differs depending on the purpose of the enterprise, even under a highly developed Communism.

That’s why it is reductive to proclaim in advance that communist society will be extremely centralised.

Marxism is not about completely destroying the old society and building a new one from the void left behind. Humans do not have the “free will” to build any kind of society they want.

Correct

Marxists view the on-the-ground organization of production as determined by the forces of production themselves, not through politics or economic policies.

The relationship between the base and the superstructure is not hyper-deterministic. The base reasserts itself during revolutionary conjunctures, but if the base always fully determined the superstructure, and the development of the base occurred automatically, there literally wouldn’t even be a point to engaging in communist politics. At that point, communists could simply sit back, relax, and watch Communism appear in “due time”.

Furthermore, the very existence of a common plan for organising society itself presupposes that the superstructure asserts itself over the base.

Feudalism at that point basically didn’t even exist anymore

On the contrary, the natural peasant economy still existed in France for many years after the French Revolution, and attempted reassertions of feudal relations and feudal superstructures in France continued for many decades after the revolution.

You are just not a Marxist, and that’s fine, if you are an anarchist just be an anarchist and say you are one and don’t try to misrepresent Marxian theory.

I’ve never been accused of being an anarchist before. I guess there is a first time for everything.

It is also somehow idealism, apparently, to expect that future communist societies capable of planning production for the whole of society will organise themselves more intelligently than by obsessing over some ill-defined metric of “centralisation” and chaining themselves to it.

Oh wow, all of Marxism is apparently just anarchist hate

I would like to see how this snark squares with literally anything I have said in my comment, or even in my posting history.

They dream of taking all the large centralized enterprises and “busting them up” so to speak.

I think it’s amazing that you completely missed the part of my comments where I made sure to specify that “decentralisation” is also a nonsense category and not something to strive towards.

You also seem to have forgotten, while writing your comment, that you labeled me a pedant for basically wanting centralisation but calling it socialisation.

I also seem to have missed the part of Marx where he said “historical materialism is when you take the current trend of society and draw a straight line to extrapolate”.

No, a revolution in the forces of production is a phase change. It does not occur instantaneously, but the whole point is that the megatrend of developmental progress has been fundamentally altered.

I think that this machine learning stuff is just revealing the role of illustrators/writers/musicians in our society: slop factories. They’re here to churn out the latest cash-grab sequel movies and generic Billboard Hot 100 songs. Whatever you define “art” as, it’s probably not what these industries are pumping out, even with human talent.

But that’s not the fault of the code, because it’s been like this since forever. Besides, shouldn’t it come as a relief that this uninspired, unfulfilling work is becoming automated? The amount of support I’m seeing online for these infamously soul-crushing jobs is a little weird. I mean, I know unemployment is worse, but people are getting all RETVRNy about grinding at their poorly paid creative jobs. Surely we can imagine a future better than wage slaving for suits, right? Beyond the commodification of all human expression? Maybe not.

The more I read about this whole LLM thing, the more I hate “artists”. Why are picture drawers so pretentious as to call everything they make “art”?

I draw pictures as a hobby and they’re not art, they’re just pictures.

The “art market” is NFTs before blockchain. If those rich dumbasses really liked the pictures so much, they’d take a photo, or a copy. But no, they need the “original” because they think it will go up in “value” (sell to bagholder).

Yeah, the English definition of the word “art” is bound to create resistance to it; that’s why words like “daub” or “slop” keep emerging.

So what is the definition of art then? At what point can a doodle be considered art?

Art, to a person, is whatever that person considers art; it’s personal.

Seems like I’m getting tripped up by a language barrier here; the word for art in my language might mean something different from the English one.

Edit: another thing I noticed is that my brain, for some reason, only says “art” when looking at a collage, as in “collage=art, no collage=not art”. No idea why…

I draw pictures as a hobby as well. However, I understand that the vast majority of “art” is created by wage laborers, most of whom work in the entertainment and games industries, both of which are notorious for overworking their laborers. I’m sorry that you seem to have this growing “hate” for a subsection of the working class; it doesn’t seem very comradely of you, if I’m being honest. Who exactly are these “picture drawers”? The ones you diminish with your tone and words work longer hours under more stressful conditions than I do as a hobbyist artist. It is funny that Yog uses photography as his primary example in his essay, because photography is a field of creative work that exists only as a result of technological advancement. The camera does not alienate the artist from the process of creating art; it created the artist. It is, in itself, neutral with regard to the artist, because without it there would be no photography. It stands in stark and blazing contrast to textual and graphical AI “art”, in the sense that, if generative text and graphic AI tools were to vanish tomorrow, graphical art would still be produced and literature would still be written.

Somehow, I am not shocked to see this opinion from a Lemmygrad user. As of late, I have noticed a growing acceptance of the weapon of alienation against the wage labor artist. That is the chief complaint here, isn’t it? That this “tool” is not one of neutrality, unlike many of the other advancements in both entertainment and games. Generative AI models produce end products because they are trained on end products; this is precisely how the tool reduces the artist’s role to that of a subordinate to it. Sure, as Yog points out, there are people doing novel things with AI as part of a bespoke workflow, but that is not what the vast majority of capitalists desire when looking at these tools. They want to cut out graphic designers, stock photography licenses, and low-level artists. They intend to contract an artist for a set amount of time, train an AI model on their limited labor, and then never hire them again.

Hobbyists like myself, yourself, and Yog have no skin in the game in this argument, except as part of a unified class in a struggle against capitalist desires. The line is drawn there. I have the luxury of producing my own drawings in what limited leisure time I have. Ironically, artists in the creative fields, working for a wage or a commission to sustain their living conditions, produce more art in service of reproducing their own labor than art for the sake of creating art. In that way, I have more creative freedom than an artist who works in the field for a living.

So again, I’m sorry that you’ve found yourself on the side of capital in this regard. Maybe you’ll come to see that to be true in the future. I do not suspect that I’ll have changed your position with a few paragraphs of text.

I think the anti-“anti-AI artist” sentiment, at least online, is (or rather should be?) directed more towards the semi-hobbyist to small-business, petit-bourgeois types who throw their weight behind our class enemies, e.g. who side with stricter intellectual property laws to the point of agreeing that “art style” should be covered by trademark/IP law. They also happen to be the loudest crowd when it comes to anti-AI-art noise, to the point of attacking and brigading professional artists who use AI tools. In my observation, the “small business owner” type of artist is the most resistant to being proletarianized and acts accordingly; in any case, many wage-laborer artists also have side hustles like online stores for periodic sketchbooks/artbooks/prints (I point this out in a neutral manner, not to detract from their (presumably primary) wage income, but to color the discussion of petit-bourgeois aspirations among the “[digital] artist” community).

I have seen efforts to “convert”/“deprogram” parts of the artist community away from supporting harsher IP laws, explaining that Disney, record companies, and the like benefit the most from such laws, that supporting harsher IP laws does not benefit artists, and that it in fact empowers the exploitative schemes of capitalists. But these efforts have a harder time gaining a foothold amid the black-and-white anti-AI/AI-art views entrenched in the community, especially as call-out and cancellation brigades roll out regularly in those circles.

Edit: I dunno if y’all were around from the DeviantArt era to the [idk what platforms certain art communities use anymore; ArtStation?] era, but the absolute EASE with which online “artists” throw around the words “steal/theft” to apply to whatever they didn’t like (a petit-capitalist mindset where “inspiration” becomes “mimicry” becomes “copying” becomes “stealing”, e.g. “actually deprived me of money”) has been around for decades at this point. I think there are still circles that treat tracing (for practice) and even referencing as untouchable/sacrilege.

I think artists have been placed in that position through decades of combat with capitalists over their costly labor reproduction. Instead of having an artist in house, you contract the artist out through commission work. You no longer need to provide them benefits or wages, and you can discard them when they’re done. They are gig laborers. They stand to lose just as much and be alienated in similar ways as the wage laborer found in other production houses.

You are correct in the assessment that this only drives them to pursue stricter property laws, but that is the natural outgrowth of the system of capitalism. In fact, it is a deep and cutting contradiction. IP laws and copyright laws exist to protect the holder of created assets, but in a world where corporations can also be the holders of creative assets, it pits the demands and desires of the individual artist against the capitalist monopoly on creative expression. They end up supporting laws that further entrench the interests of capital, a blade which will soon be turned back on the individual artist, cutting them where they stand. That is the fate of the petty bourgeoisie: they are either kicked into the mass grave dug by the capitalist, or they dig the capitalist’s grave.

These are precisely the people who need to be agitated on the side of the working class, and using AI generative tools to do so will not be a successful path.

I didn’t say that “we should agitate people with petit bourgeois aspirations to side with the working class [their real class] via gen ai tools”. I guess I got ahead of myself when I said there have already been efforts to convince artists that gen AI, or tech in general, isn’t their enemy, but that corporate monopolization of tech is, because capitalists are. (I.e., yes, there should be such efforts, maybe better or more of them, but anti-AI sentiment is very entrenched at this point.)

However, I did point out that these people, influenced by a combination of (self-circulated and corporate) propaganda and their own petit-bourgeois aspirational class interest (resisting acceptance of proletarian status), are throwing their weight behind our (and their own) class enemies, with gen AI/AI art as the driving wedge at the present moment; but any new tech that reshapes the material landscape would be treated similarly. My comment isn’t about AI in particular; it’s more about the art community in question.

Or maybe I wasn’t clear enough? The anti-AI-art frenzy in the artist community is being funnelled towards support for harsher IP and trademark laws by companies that would actually still use gen AI but want to hoard, control, and expand “their” datasets. Yes, more people on the side of the working class is good, but class traitors exist, and if these people, after it has been explained to them that technology itself isn’t their enemy but capitalists are, still want to be willing pawns, then, well, for one, I have better uses for my time and energy, and yeah, I’d agree with m532 that they’re “dumbasses”.

Case in point: unvale.io, a project born of the anti-AI craze. Here’s a tumblr post they circulated (since there’s a sizeable anti-AI art/artist community on tumblr), but digging into the replies, people are thoroughly picking apart the draconian, PG, family-friendly TOS and pointing out that the platform is an oddly convenient place to harvest virgin (untouched by gen AI tools) and corporate-friendly data. Not everyone looks through the notes, however, and plenty of people are still reblogging just the first post and “spreading word about an awesome new no-ai platform!!”. Also, lol @ the #supportourtroops shit :: no Oct 7 or 9/11

*Outside of this direct discussion, there’s also extreme ableism among anti-AI artist crowds.

In my view, the ultimate contribution of generative AI is the ability to use already developed knowledge. Most of an artist’s work is not novel; it consists of using already developed styles and techniques to make something that meets the client’s request. The same goes for coding: a web developer rarely develops a custom component to build a client’s app. AI makes these processes very straightforward and could liberate people from doing this boring work.

What gen AI simply can’t do is create novel things, and that is exactly why people should embrace this tool: it cannot create a unique art style (other than the AI slop art style), nor can it develop a faster sorting algorithm. With good gen AI, people will no longer have to reinvent the wheel and can spend more time on the fun stuff: creating and experimenting with novel things.

I haven’t read either yet, as I haven’t had much time, but as a methodological side note I just want to say I really enjoy this “tribune” style of using the forum. Well-thought-out polemics are part of the development of a correct line.

I’ll try to read them both carefully later, but right now I just want to compliment the effort put forward by both users.

One of the obvious things that these generative models exhibit is a clear and distinct lack of intention. I believe that this lack of “human intention” is explicitly what drives people’s revulsion at the end product of generative art. It also becomes “a sort of torture” under which the artist becomes employed by the machine. There are countless accounts of artists whose roles as creators have been reduced to that of Generative Blemish Control Agents, cleaning up the odd, strange, and unintended aspects of the AI process.

This is a fascinating way of looking at it, and one I had often struggled to put into words. “Intentionality” is something you don’t consciously associate with a piece of art, but when you think about it, human artists are in full control, and every detail and decision in their art is made at their discretion.

Indeed, the very knowledge that something was generated by a machine immediately also informs you that what you’re looking at was deemed good enough by the human patron of the machine, rather than being a deliberate, intentional production where every stroke and detail was placed with purpose and artistic vision. It is comparable to someone presenting you with a piece of art and telling you they created it themselves, versus presenting a piece of art they commissioned someone else to create. In both cases, the final product fits their specifications and expresses precisely what they wish it to, but only in the first case is there no doubt that each and every facet serves that intention, rather than being a third party’s best interpretation of it.

Art can have merit regardless of who makes it: a machine, an artist, or an artist commissioned at the behest of a patron. I do not think anyone can really disagree with this. But the difference lies in how we perceive the sincerity of something that comes entirely from the mind and work of a human artist with a message, compared with something from a machine that is fed instructions, or even from an artist who is handed their specifications by someone else.

I think anyone can see, even here on Lemmy, that AI agitprop is very frequently met with disdain from some, even when most agree with its message and cause, and I think this is a large part of why.

I think you’ve really picked up on my train of thinking, this is precisely how I feel as well.

And in turn, you’ve expressed in a very thought-provoking and analytical way the things my own reptile brain and tenuous grasp of English have left me unsure how to say myself. Great write-up, friend.

Thanks comrade!

Thanks comrade!

DeepSeek democratizing the alienation of artists and writers from their labor.

I don’t think you’ve sufficiently substantiated this claim. I don’t think any laborers in the tech industry believe this.

Tech workers don’t need to train DeepSeek themselves to derive value from DeepSeek, in the same way that tech workers don’t need to build Linux or VS Code themselves to derive value from those tools. Nothing is completely open, of course. Linux development is mostly driven by for-profit companies, and VS Code is wholly owned by one, but both still benefit everyone and make the ecosystem more accessible.

I think we need to separate out the various sectors this “tool set” impacts, because in my statement I’m explicitly talking about artists and writers: wage laborers for multimedia enterprises who require a high degree of training and experience to do their work. “Tech workers” are a very different category of laborer, and AI presents itself to them in a very different way than it does to artists. It still amounts to a form of alienation, even if what a coder does can be rather deterministic compared with the work of a graphic designer, storyboard artist, or scriptwriter.

At present, we know this issue comes down to one of intellectual property: most of the data required to give DeepSeek and others their coding abilities was appropriated in violation of the terms of the various open-source licenses. What do these licenses protect, though? Is it the various if/then/else code blocks, the declarations of structs or classes, or is it something else? These licenses protect the labor of intentioned design. Intentioned design is something that AI is not explicitly capable of: it produces end products but does very little to approximate design intention. That isn’t stopping the capitalist from attempting it, however, which is why models like DeepSeek R2 exist. They exist to try to bridge the gap between generating raw end product and generating intentioned design. This creative labor, a labor of intention, is what produces things like Linux and VS Code, without which there would be no data to train these models on. That is a large contradiction at the heart of the system. In a world still reliant on the wage system, this appropriation represents a kind of loss of wages, not in the short term, but in the long term should nothing change.

Your examples are not tools that alienate tech workers from their labor in the same way; if anything, they enhance the labor process. VS Code and similar integrated development environments (IDEs) streamline the production of code by providing linting tools, formatting tools, tab completion, and extensibility through extensions, allowing the laborer to tailor the production process to their desires and preferences. They do not replace the production process the way generative AI does.

Generative AI for coding is closer to an actual enhancement of the labor process than it is to a process of alienation, since there are many deterministic coding patterns that tools like Copilot can predict. But the capitalist doesn’t care about enhancing creative output; they care about reducing labor costs. They’re willing to place lower-skilled laborers at the helm of something like Copilot to do the work of a more highly skilled laborer. Systems design and building is a skill set that requires design intention, and if you are not trained to build proper, secure systems, you won’t be able to identify when Copilot produces insecure code, since we know these models are trained on all kinds of codebases, secure and insecure.

I know it sounds like I’m circling the same “ethical” topic here, but this is a line of critique consistent with our understanding of capitalism. If the Luddites were still an active group today, they would be burning down datacenters in protest over this clear and present threat to their labor. We must take this line, as this rhetoric stands to be more powerful and more in alignment with other anti-automation positions within the working class, like those of the dockworkers during their strike just a few years ago. Even then, part of the general population held a sentiment in line with the one we’re discussing here, one that doesn’t acknowledge the alienation being forged by this automation: “It would be more efficient if we implemented automation at the docks than let the dockworkers continue to do their labor.” In supporting that line, we are supporting the displacement of the worker for the benefit of the capitalist. To me, there is no distinction between the two.

Intellectual property licenses protect property rights not intellectual labor. That’s like saying property rights protect labor and I don’t think anyone would agree with that.

I’m also not too concerned about the rights of labor aristocratic artists being infringed upon. If these artists and writers were creating their art for the sake of bringing about positive social change, then this would be worth considering, but as the creative industry stands today, they mostly work to support consumption ignorance and inequality.

> Intellectual property licenses protect property rights not intellectual labor. That’s like saying property rights protect labor and I don’t think anyone would agree with that.

I’ll take that one on the chin, that’s fair. In my haste I am conflating concepts.

> creative industry stands today they mostly work to support consumption ignorance and inequality.

Isn’t this simply everything under capitalism? I guess you can take a principled stance with your art, but you’ll more than likely die broke and homeless.

I think in general, the focus of the discussion needs to move away from “democratization” as a framing. It seems to get used as a contrast to hegemony, but I’m not sure it’s actually what we’re talking about. Maybe it’s a pedantic point (but in my defense, much of this discussion is very pedantic). My understanding of “democratic” is that it implies an organized process. What seems to be the subject of discussion is more like free-for-all vs. corporate capture. How can we even talk about democratic processes while living within a capitalist society, when we don’t control the means of production and we are talking about individuals making choices without any kind of party direction or discipline behind them?

Perhaps this is the source of some of my confusion about how staunchly “anti” some people are about certain types of AI. Suppose the conclusion from all of this were that this technology, in its generative form, is currently inseparable from capitalism and its exploitation, and that nothing good can come from it while capitalism is the dominant economic mode. By what means are we supposed to oppose it, and to what end? Boycotting works for some things, but it’s not even all that mainstream a tech to begin with, and companies are pumping tons of money into it as aspirational tech for a market that hasn’t fully materialized yet. Creating an environment of shaming and fear around it, which seems to be the more common direction of those who fervently oppose it, only sends the message that if you do want to use it, you shouldn’t tell anyone. It doesn’t explain in clear and simple terms why it is worth boycotting, what concrete outcome will be achieved, or how that outcome can be achieved in the first place. Nor does it attempt to engage with, or investigate, the reasons why some people are drawn to it. (This is not a criticism of you, OP, but a criticism of the general atmosphere of how anti-generative-AI often seems to look in practice.)

Perhaps in part because of its occasional overlap with NFT and crypto grifters in marketing, it seems to have become something of an anti-capitalist punching bag: an easy target for directing ire at big tech and the problems of capitalism. But many of the problems that come up in relation to it already existed to some degree prior to AI, such as the tendency for the arts to be more about mass-producing things criticized as low quality than about anything inherently artistic, or how the western internet (I can’t speak for elsewhere) has ridiculous competition and content-mill patterns of behavior. Generative AI accelerates and exacerbates these problems, but it did not originate them. So it can come across as a bit of scapegoating. A lot of energy is expended saying “this is too far”, as if the other stuff hadn’t already been happening and leading up to it.

I don’t want to trivialize the downsides, but at the same time, I’m concerned that it is much ado about nothing relative to the larger problems of capitalism that need addressing, and that those who oppose generative AI are themselves getting swept up in some of the marketing and believing it a bit too much, treating its potential as faster-moving and larger than it is.

There are two things at play here that, I think, need to be addressed. There is the methodology that can be applied to write a computer program that can “learn” a task, and then there are the interactive experiences that allow you to create your own output with a pretrained model. The former is a tool in all regards, one that is “neutral” in its composition because it can be applied broadly across many different disciplines. The latter, a model trained on terabytes of art, exists only to alienate the artist as a wage laborer in an attempt to reduce the overall reproduction cost of their labor. These “neural networks” can be trained to do all kinds of tasks, and in 2017 a paper titled “Attention Is All You Need” introduced the Transformer architecture on which most of these generative tool sets are built. A great example of this underlying technology being used outside the creative fields is the work being done on proteins, such as ProtGPS, a model for predicting where proteins end up within a cell:

The researchers trained and tested ProtGPS on two batches of proteins with known localizations. They found that it could correctly predict where proteins end up with high accuracy. The researchers also tested how well ProtGPS could predict changes in protein localization based on disease-associated mutations within a protein. Many mutations — changes to the sequence for a gene and its corresponding protein — have been found to contribute to or cause disease based on association studies, but the ways in which the mutations lead to disease symptoms remain unknown.